Note: This is not a live demo; the server configuration is for illustration only. For a live working example, try the Dolby.io Publisher and Subscriber example or the AWS Kinesis Publisher and Subscriber example.

This example demonstrates dynamic virtual background removal using a green screen to render a mask. The rendering is done on the GPU with WebGL.
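To make the masking idea concrete, here is a minimal CPU-side sketch of a chroma key mask. It is not the player's implementation, which renders on the GPU; the function names `parseKeyColor` and `chromaKeyMask` and the `threshold` value are hypothetical and chosen only for illustration. The key color uses the same hex format as the keyColor setting in the configuration.

```javascript
// Hypothetical helper: parse a hex key color such as "00ff00" into RGB components.
function parseKeyColor(hex) {
  const value = parseInt(hex.replace(/^#/, ""), 16);
  return { r: (value >> 16) & 0xff, g: (value >> 8) & 0xff, b: value & 0xff };
}

// Hypothetical helper: build one mask value per pixel from RGBA data
// (the same layout as ImageData.data). Pixels whose color is within
// `threshold` of the key color become transparent (0); others stay opaque (255).
function chromaKeyMask(rgba, keyColorHex, threshold = 100) {
  const key = parseKeyColor(keyColorHex);
  const mask = new Uint8ClampedArray(rgba.length / 4);
  for (let i = 0; i < mask.length; i++) {
    const dr = rgba[i * 4] - key.r;
    const dg = rgba[i * 4 + 1] - key.g;
    const db = rgba[i * 4 + 2] - key.b;
    const distance = Math.sqrt(dr * dr + dg * dg + db * db);
    mask[i] = distance < threshold ? 0 : 255;
  }
  return mask;
}

// Two sample pixels: pure green (keyed out) and a skin-like tone (kept).
const pixels = new Uint8ClampedArray([0, 255, 0, 255, 200, 150, 120, 255]);
const mask = chromaKeyMask(pixels, "00ff00");
console.log(Array.from(mask));
```

A GPU version would typically perform the same per-pixel distance test in a fragment shader, which avoids reading pixels back to the CPU.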

Obtaining the virtual stream for publishing incurs CPU usage because Chrome encodes it in software, so the resolution needs to be restricted to 720p.

Backgrounds can be applied using the bgImage or backgroundColor config on the publisher.

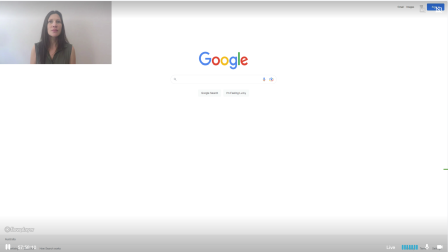

With screen toggling enabled, when switching to screen share, the screen share video is rendered as a GPU background texture and the camera video is rendered, resized, in the foreground.

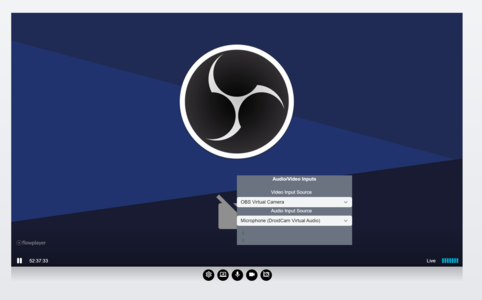

An API is provided to toggle the virtual rendering and to update the background image, color texture or chromakey key color. The render type can be set to 1 for mediapipe or 2 for chromakey, or called with an empty argument to turn rendering off.

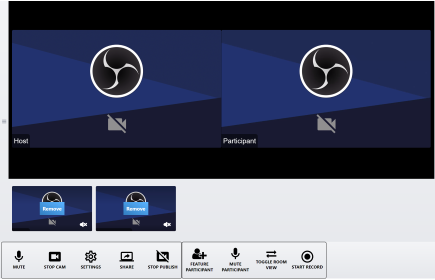
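The render type values described above can be mapped to readable labels, for example when building a custom toggle control. The helper below is a hypothetical sketch; `describeRenderType` is not part of the player API, and only the values 1, 2 and empty come from the text.

```javascript
// Hypothetical helper: translate a render type value into a label.
// 1 = mediapipe, 2 = chromakey, anything else (including no argument) = off.
function describeRenderType(renderType) {
  switch (renderType) {
    case 1:
      return "mediapipe";
    case 2:
      return "chromakey";
    default:
      return "off";
  }
}

console.log(describeRenderType(1));
console.log(describeRenderType(2));
console.log(describeRenderType());
```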

Virtual background is supported in both the WebRTC publishing and web conferencing features.

The maxHeight setting restricts the dimensions of the canvas rendering stream. Canvas capture streams are software encoded, which increases CPU usage on top of the WebGL rendering. At 1080p the CPU usage doubles; 720p seems more efficient.

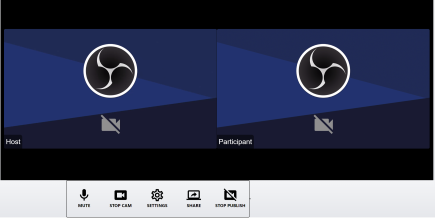
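As a rough illustration of what a height cap does to the stream dimensions, the sketch below scales a source resolution down to a maximum height while keeping the aspect ratio. The function `clampToMaxHeight` is hypothetical and only models the arithmetic; how the player applies maxHeight internally is not shown here.

```javascript
// Hypothetical helper: limit a resolution to maxHeight, preserving aspect ratio.
function clampToMaxHeight(width, height, maxHeight) {
  if (height <= maxHeight) {
    return { width, height };
  }
  const scale = maxHeight / height;
  return { width: Math.round(width * scale), height: maxHeight };
}

const capped = clampToMaxHeight(1920, 1080, 720);
console.log(capped);

// Pixel count per frame at each resolution.
const pixelRatio = (1920 * 1080) / (capped.width * capped.height);
console.log(pixelRatio);
```

A 1080p frame carries 2.25 times as many pixels as a 720p frame, which is in line with the higher CPU cost observed for software encoding at 1080p.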

Virtual Screen Sharing

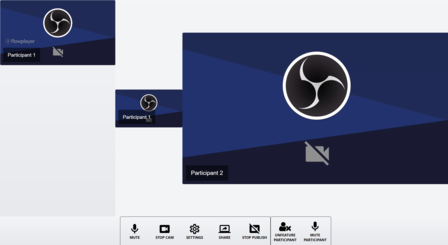

When screen sharing is enabled with toggleScreen in WebRTC publishing and virtual rendering is enabled, a virtual screen share mixer with camera input is enabled.

In web conferencing, screen publishing is separate and will not be mixed; only the stream of the virtual rendering will be switched to.

The scaledWidth configuration sets the scale of the video texture relative to the screen share background texture, expressed as a fraction (for example, 0.25 for 25%).
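The effect of scaledWidth can be sketched with simple arithmetic. The helper below assumes the value is applied to the width of the screen share texture and that the camera aspect ratio is preserved; both are assumptions for illustration, and `cameraOverlaySize` is a hypothetical name, not a player function.

```javascript
// Hypothetical helper: size of the camera overlay on top of the screen share.
// Assumes scaledWidth is a fraction of the screen share width and that the
// camera aspect ratio is kept.
function cameraOverlaySize(screenWidth, cameraWidth, cameraHeight, scaledWidth) {
  const width = Math.round(screenWidth * scaledWidth);
  const height = Math.round((width * cameraHeight) / cameraWidth);
  return { width, height };
}

const overlay = cameraOverlaySize(1920, 1280, 720, 0.25);
console.log(overlay);
```

Under these assumptions, a 16:9 camera over a 1920 pixel wide screen share with scaledWidth set to 0.25 gives a 480 by 270 overlay.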

<div class="flex w-full">

<div id="chromakey" class=""></div>

</div>

<script type="text/javascript">

var player = flowplayer("#chromakey", {

"clip": {

"live": true,

"sources": [

{

"appName": "webrtc",

"src": "myStream",

"type": "application/webrtc"

}

]

},

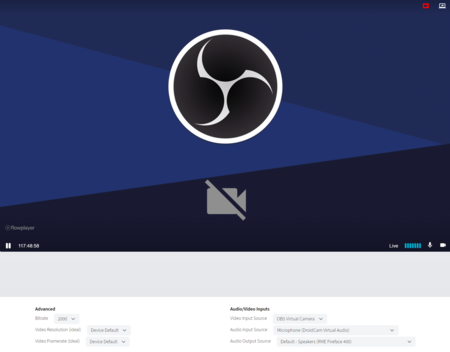

"rtc": {

"applicationName": "webrtc",

"autoStartDevice": true,

"publishToken": "eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJ3ZWJydGMifQ.MjehxjweF5tPPqUJQjHEHdHLz4sjaXkTkJh0dkM8w9_M5PMKoyzUJZPYyYtgT1qn17eDZlUOUeIeyr37z-KhN1u66L2ScVCwvs0dBjf6s2ZSIw0shvKmCdq7u5bd5llWTY0FDbbtFA1l60CkfOsTd0_dQpeyKm3Y94XgIaPyJB_PCCezO8V1xmcyMT1aqPfwr99AmM8s_P_8nuDL6A1HHppImwZL550AnTjuPQaAMRSVSNuzlLrwFXBA1SRaKOa2AVkIzP0tYkqWCYd03Gvn_CpxZ5dhk5s4UYSoYeK2FX4nz4khn_k8loFO-vDu2M-1r7dvFXnt8iNYWGTzDxPNuQ",

"publisher": true,

"server": "wowza",

"serverURL": "rtc.electroteque.org",

"toggleScreen": true,

"userData": {

"param1": "value1"

},

"virtual": {

"backgroundColor": "000000",

"bgImage": "../../images/virtualbg.jpg",

"keyColor": "00ff00",

"maxHeight": 720,

"renderType": 2,

"scaledWidth": 0.25

}

},

"share": false

});

player.on("devices", (event, devicesMap, deviceInfos) => {
  //get available devices here for building your own UI
  console.log("devices", devicesMap, deviceInfos);
}).on("initdevices", () => {
  console.log("init devices");
}).on("mediastart", (e, info, deviceInfo, videoConstraints, capabilities) => {
  //console.log("mediastart111", deviceInfo);
  //selected device has been activated
  console.log("Device Start ", info.deviceInfo);
  console.log("Updated Device Info ", info.newDeviceInfo);
  console.log("Video Constraints ", info.videoConstraints);
  console.log("Capabilities ", info.capabilities);
}).on("mediastop", (e) => {
  console.log("Device Stop");
}).on("devicesuccess", (e) => {
  console.log("Device Permissions Success");
}).on("deviceerror", (e, error) => {
  console.log("Device Error", error);
}).on("startpublish", (e, supportsParams) => {
  console.log("Publishing Started");
  //use this for enabling / disabling bandwidth select menus while publishing
  //browsers that don't support it cannot update bitrate controls while publishing
  console.log("Browser Supports peer setParameters ", supportsParams);
}).on("stoppublish", (e) => {
  console.log("Publishing Stopped");
}).on("recordstart", (e) => {
  console.log("Recording Started");
}).on("recordstop", (e) => {
  console.log("Recording Stopped");
}).on("sendmessage", (e, message) => {
  console.log("Signal Server Send Message: ", message);
}).on("gotmessage", (e, message) => {
  console.log("Signal Server Receive Message: ", message);
}).on("offer", (e, offer) => {
  console.log("WebRTC Offer ", offer.sdp);
}).on("answer", (e, answer) => {
  console.log("WebRTC Answer ", answer.sdp);
}).on("bitratechanged", (e, params) => {
  console.log("WebRTC Bitrate Changed ", params);
}).on("outputsuccess", (e, sinkId) => {
  console.log("Success, audio output device attached:" + sinkId);
}).on("outputerror", (e, message) => {
  console.log(message);
}).on("ready", function(e, api, video) {
  console.log("READY", video);
}).on("data", (e, data) => {
  console.log("GOT DATA: ", data);
}).on("rtcerror", (e, error) => {
  console.log("WebRTC Error ", error);
}).on("screensharestart", (e) => {
  console.log("screen share started");
}).on("screensharestop", (e) => {
  console.log("screen share stopped");
});

</script>